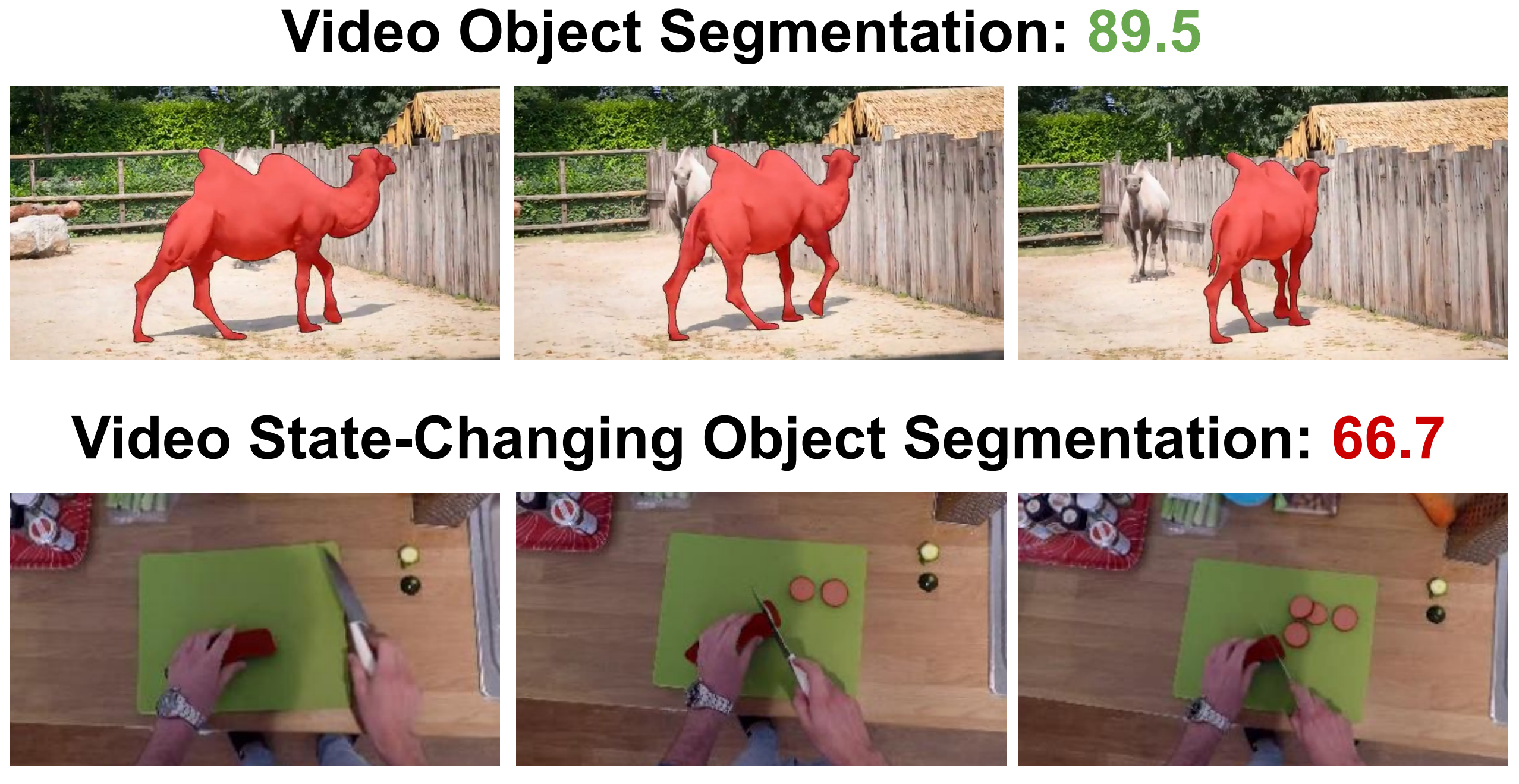

Daily objects commonly experience state changes. For example, slicing a cucumber changes its state from whole to sliced. Learning about object state changes in Video Object Segmentation (VOS) is crucial for understanding and interacting with the visual world. Conventional VOS benchmarks do not consider this challenging yet crucial problem. This paper makes a pioneering effort to introduce a weakly-supervised benchmark on Video State-Changing Object Segmentation (VSCOS). We construct our VSCOS benchmark by selecting state-changing videos from existing datasets. In advocate of an annotation-efficient approach towards state-changing object segmentation, we only annotate the first and last frames of training videos, which is different from conventional VOS. Notably, an open-vocabulary setting is included to evaluate the generalization to novel types of objects or state changes. We empirically illustrate that state-of-the-art VOS models struggle with state-changing objects and lose track after the state changes. We analyze the main difficulties of our VSCOS task and identify three technical improvements, namely, fine-tuning strategies, representation learning, and integrating motion information. Applying these improvements results in a strong baseline for segmenting state-changing objects consistently.

@InProceedings{Yu_2023_ICCV,

author = {Yu, Jiangwei and Li, Xiang and Zhao, Xinran and Zhang, Hongming and Wang, Yu-Xiong},

title = {Video State-Changing Object Segmentation},

booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

month = {October},

year = {2023},

pages = {20439-20448}

}